A quick look at the past…

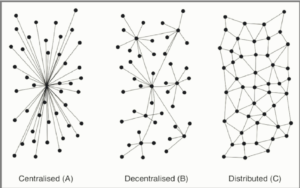

Quite some time ago, software vendors promoted a centralized hub-and-spoke middleware product for Enterprise Application Integration: the EAI broker (A).

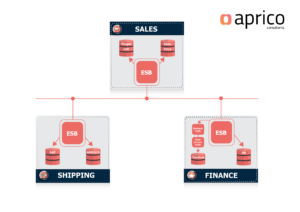

Then SOA came along, and the tool of choice became the ESB.

But in many cases the ‘B’ stood for ‘Broker’ rather than ‘Bus’, as most vendors merely face-lifted their EAI products. It took a while longer for a genuinely decentralized version of the ESB (B) to appear, but by then the damage was done.

A more decentralised approach

… and today

These days, vendors avoid the ESB label. In the current market, everything has to be distributed and flexible, while the ESB is seen as a heavy, monolithic and inflexible backbone.

In recent years, the offering has moved towards a completely distributed approach, in which applications are seen as a collection of fine-grained, autonomous services that communicate through lightweight protocols and offer more flexibility and scalability: microservices (C).

Centralized, decentralized, distributed

The flexibility of microservices comes with an even bigger challenge regarding service-to-service communication.

As the number of microservices grows, the death star anti-pattern looms: a web of service-to-service dependencies so dense that it becomes hard to understand or manage. Service meshes were developed to address a subset of these inter-service communication problems in a totally distributed manner.
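To give a rough feel for why this gets out of hand, the sketch below computes an upper bound on the number of caller-to-callee relationships among `n` services. It assumes the worst case, in which any service may call any other; real dependency graphs are sparser, and the function name is purely illustrative.

```python
def max_directed_links(n: int) -> int:
    """Upper bound on caller -> callee pairs among n services.

    Assumption: every service may call every other service (worst case).
    """
    return n * (n - 1)


for n in (5, 20, 100):
    print(f"{n} services -> up to {max_directed_links(n)} links")
# 5 services -> up to 20 links
# 20 services -> up to 380 links
# 100 services -> up to 9900 links
```

The bound grows quadratically, which is why concerns such as routing, retries and security are hard to handle by hand, one link at a time.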

Distribution can be challenging

But wait, how do we expose all those microservices’ public interfaces to the outside world? Through an API gateway. Centralized!

And how can we address the variety of API consumers? Through the BFF pattern: Back-end For Front-end. Decentralized! From that perspective, it seems that not everything is meant to be distributed.
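The contrast between the two patterns can be sketched in a few lines. This is a minimal in-process illustration, not a real gateway product: the services, paths, data and function names are all hypothetical, and the BFFs are assumed to reach the services through the gateway (in practice a BFF may also sit in front of the services directly).

```python
from typing import Callable, Dict


# Hypothetical internal microservices, modelled as plain functions.
def customer_service(customer_id: str) -> dict:
    return {"id": customer_id, "name": "Ada", "email": "ada@example.com"}


def order_service(customer_id: str) -> dict:
    return {"orders": [{"id": "o-1", "total": 42.0}, {"id": "o-2", "total": 8.0}]}


class ApiGateway:
    """Centralized: one entry point mapping public paths to internal services."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[[str], dict]] = {}

    def register(self, prefix: str, handler: Callable[[str], dict]) -> None:
        self._routes[prefix] = handler

    def handle(self, path: str) -> dict:
        # "/customers/c-1" -> prefix "customers", argument "c-1"
        prefix, _, arg = path.strip("/").partition("/")
        handler = self._routes.get(prefix)
        if handler is None:
            return {"status": 404}
        return {"status": 200, "body": handler(arg)}


# Decentralized: one small back-end per kind of consumer.
def mobile_bff(gw: ApiGateway, customer_id: str) -> dict:
    """Compact payload for a small screen."""
    customer = gw.handle(f"/customers/{customer_id}")["body"]
    orders = gw.handle(f"/orders/{customer_id}")["body"]["orders"]
    return {"name": customer["name"], "order_count": len(orders)}


def web_bff(gw: ApiGateway, customer_id: str) -> dict:
    """Richer payload for a desktop front-end."""
    customer = gw.handle(f"/customers/{customer_id}")["body"]
    orders = gw.handle(f"/orders/{customer_id}")["body"]["orders"]
    return {
        "customer": customer,
        "orders": orders,
        "total_spent": sum(o["total"] for o in orders),
    }


gateway = ApiGateway()
gateway.register("customers", customer_service)
gateway.register("orders", order_service)

print(mobile_bff(gateway, "c-1"))
print(web_bff(gateway, "c-1")["total_spent"])
```

The gateway owns the single routing table, while each BFF owns only the shape of the response its own front-end needs.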

SOA and MSA: a clash?

Due to the explosion in mobile device adoption, the rise of Internet business models, and SOAgility, companies have the opportunity to develop new customer and partner channels – and embrace the potential revenue growth that comes with them – through the API economy. Customer-facing and partner-facing engagement systems are the first to be impacted by the need for a dynamic integration style, and therefore the first place where it makes sense to start.

At the same time, organisations that have invested significantly in EAI or ESB technologies are unlikely to replace those technologies with microservices in the blink of an eye. These tools will continue to play a critical role in enabling organisations to integrate their (legacy) systems (such as ERP and mainframe) and applications effectively for quite some time to come.

These technologies are still well suited to manage the integration of a few closely-aligned teams working with a predictable technology stack. In this sense, they are effective application integration platforms rather than something that can work across a wide and diverse enterprise.

Hybrid integration needed

In conclusion, as long as a one-style-fits-all architecture remains out of reach, both architecture types will inevitably coexist. Then again, the microservice architecture approach offers a good opportunity to try out new business models and, at the same time, to reassess the existing ones.

The new landscape encourages reflection. Which parts of the environment do you still want to, or have to, operate centrally? Mission-critical core services do change rather infrequently. But still, why wouldn’t you want to improve their current set-up?

And of course, your IT environment has more than mission-critical core services alone. Where does it make sense to disrupt the existing models, put a fail-fast strategy in place and create so-called edge services?

Questions? Don’t hesitate to get in touch. Aprico develops and implements innovative ICT solutions that improve productivity, efficiency, and profitability, combining cutting-edge expertise with a thorough command of both the technological and business aspects of clients in all industries. Aprico enables clients to be more efficient and to take up technological challenges, including SOA and MSA.